Topic: AI

Published: October 2025

Reading time: 6 minutes

Google's End of &num=100

A Pre-Remedy Move to Fortify its Ecosystem?

In mid-September 2025, Google quietly removed support for the &num=100 parameter, which had allowed users to view up to 100 search results on a single page instead of the default 10.[1] The feature was a staple for researchers, SEO analysts, and developers who relied on it to collect and analyze larger datasets efficiently. For example, an SEO analyst at a marketing agency might have used &num=100 when researching how a client’s website ranks for a keyword like “activant research” (e.g., https://www.google.com/search?q=activant+research&num=100). Seeing all 100 search results at once let the analyst quickly scan competitors, identify trends in top-ranking pages, and export the full list for analysis without clicking through multiple pages.
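To make the mechanics concrete, here is a minimal sketch of how the example URL above is assembled from its query parameters. The helper name `build_search_url` is hypothetical and introduced only for illustration; the sketch builds and parses the URL string and does not send any request.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse


def build_search_url(query: str, num: Optional[int] = None) -> str:
    """Build a search URL string; `num` is the results-per-page parameter
    that, per the article, Google stopped honoring in mid-September 2025."""
    params = {"q": query}
    if num is not None:
        params["num"] = num
    # urlencode escapes spaces as '+', matching the URL quoted in the article.
    return "https://www.google.com/search?" + urlencode(params)


url = build_search_url("activant research", num=100)
print(url)  # https://www.google.com/search?q=activant+research&num=100

# Parsing the query string back out shows the two parameters involved.
print(parse_qs(urlparse(url).query))
```

Without `num`, the same helper produces the plain `?q=...` form, which corresponds to the default 10-result page.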

While the shift affects reporting rather than rankings, it disrupts a decade of SEO trendlines and makes large-scale scraping materially harder. Expect cleaner Google Search Console metrics, higher costs for scrapers and agents, and more control in Google’s hands.[2]

The real question is whether this was a direct response to the DOJ’s antitrust ruling and a pre-remedy move to fortify Google’s position ahead of court-mandated data sharing.

What changed?

From September 10–14, 2025, industry data shows widespread drops in Search Console impressions and keyword counts, while clicks and actual rankings remain largely unchanged.[3] This timing aligns with the disablement of the &num=100 parameter, suggesting the decline reflects reduced query-level visibility and data availability rather than real traffic loss or ranking shifts. This means:

- Cleaner data: Fewer “deep-page” bot impressions are counted, lowering total impressions but raising average positions since lower-ranking pages no longer dilute results. Click-through rates now better reflect genuine user behavior.[3]

- Higher scraping costs: Rank trackers and AI agents must now paginate, issuing up to 10 times as many requests to retrieve the same 100 results and hitting rate limits and CAPTCHAs more frequently.

- Measurement: For over a decade, tools supporting visibility indices, rank tracking, and market share modeling relied on the parameter. Its removal disrupts historical trendlines and breaks backward comparability.
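The arithmetic behind the first two bullets can be sketched with made-up numbers. Everything below is an illustrative assumption: the impression counts, positions, and click total are hypothetical, and average position is modeled as a simple impression-weighted mean, which is a simplification of how Search Console reports it.

```python
import math


def requests_needed(depth: int, page_size: int) -> int:
    """Number of page requests needed to cover `depth` results."""
    return math.ceil(depth / page_size)


def summarize(impressions):
    """impressions: list of (position, impression_count) pairs.
    Returns (total impressions, impression-weighted average position)."""
    total = sum(count for _, count in impressions)
    avg_position = sum(pos * count for pos, count in impressions) / total
    return total, avg_position


# Hypothetical site: human impressions near the top of page one, plus
# deep-page impressions generated by rank-tracking bots loading 100 results.
human = [(3, 400), (8, 100)]
bots = [(45, 300), (90, 200)]
clicks = 25  # clicks come from people, so they do not change

before = summarize(human + bots)  # (1000, 33.5)
after = summarize(human)          # (500, 4.0)

print("requests for top 100 at 100 per page:", requests_needed(100, 100))  # 1
print("requests for top 100 at 10 per page:", requests_needed(100, 10))    # 10
print("before:", before, "CTR:", clicks / before[0])  # CTR 0.025
print("after: ", after, "CTR:", clicks / after[0])    # CTR 0.05
```

In this toy case impressions halve, the average position moves from 33.5 to 4.0 (a numerically lower position is a better rank), and click-through rate doubles, all without a single ranking or click changing.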

Was this strategic?

Although Google has not made a formal announcement, the quiet removal of the parameter appears deliberate.[4] The move is best understood not as a single action, but as a calculated step in a much larger strategy involving data control, antitrust pressure, and the future of generative AI.

Raising the Walls Against Scrapers

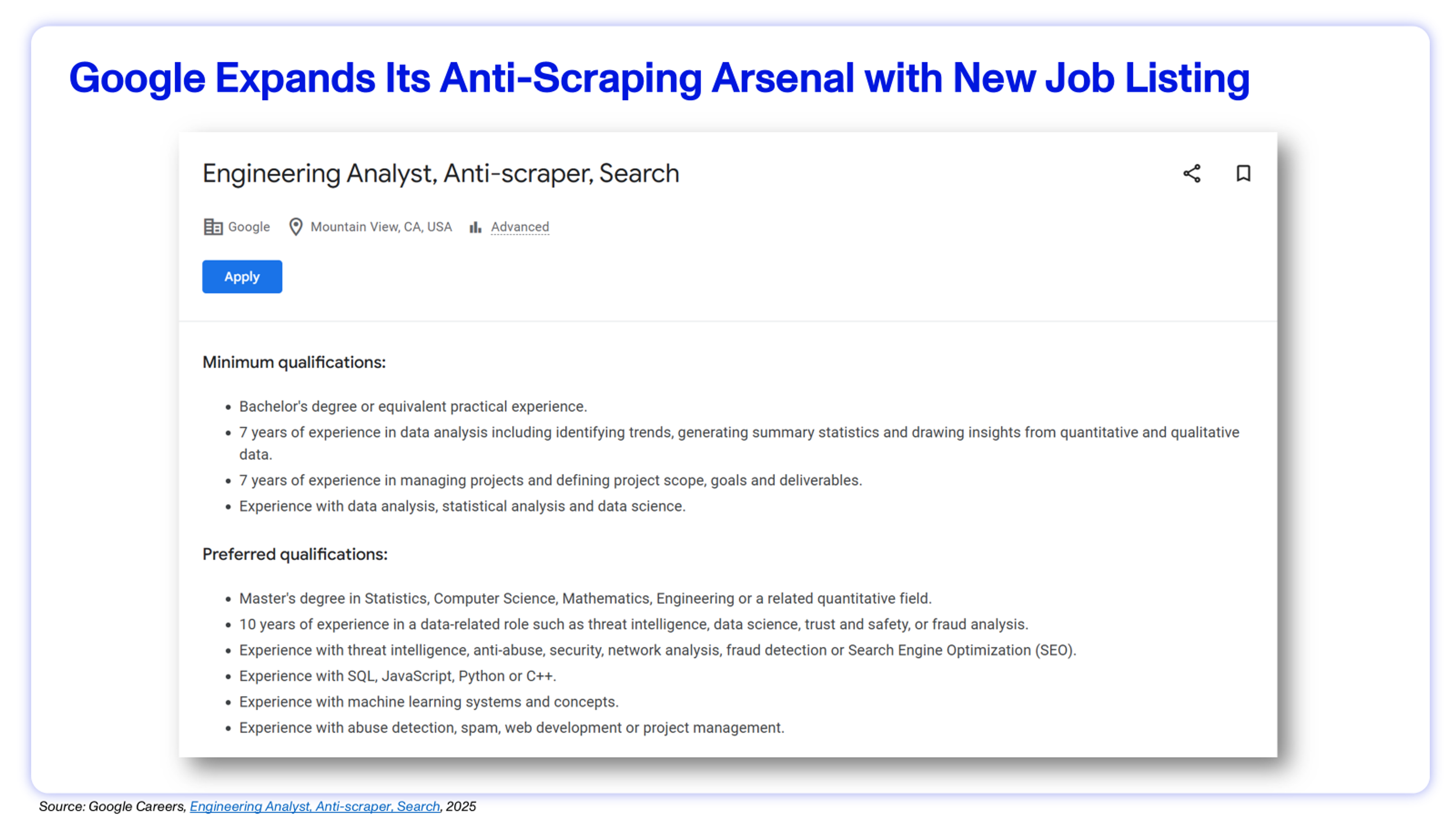

The immediate effect of disabling &num=100 is to restrict the bulk retrieval of search results that has long powered SEO tools, competitive intelligence platforms, and AI training pipelines. Historically, scraped result pages have fueled everything from market share trackers to retrieval-augmented generation (RAG) systems. Because Google offers no official Search API with full access to live results, third-party services like SerpAPI emerged to provide this data programmatically. Earlier in 2025, it was reported that AI firms like OpenAI and Perplexity relied on SerpAPI to fetch live results for real-time answers.[5] This change aligns with Google’s broader anti-scraping campaign, which includes hiring for an “Anti-Scraping Engineering Analyst”, deploying tighter CAPTCHAs, and improving bot detection. Together, these actions point to a concerted effort to raise the cost of large-scale data harvesting and preserve the exclusivity of its search data.[6]

Pre-Positioning for Antitrust Remedies

This defensive move appears to be part of Google’s pre-remedy positioning ahead of court-mandated data-sharing measures stemming from the DOJ’s antitrust case. The recent ruling requires Google to provide rivals with certain search index and user-interaction data (including a one-time snapshot of URLs and webpages it has indexed).[7][8] Furthermore, Google must operate a temporary syndication program to help new entrants cover long-tail and local queries while they build their own indexes.[7]

These long-tail queries are individually rare, specific searches that collectively account for a large share of query volume, and they illustrate the industry’s “80/20 problem”. While covering the first 80% of common queries is relatively achievable, the remaining 20% requires massive, constantly refreshed data and large-scale click feedback. Court testimony from Microsoft and DuckDuckGo emphasized that this user-side and tail-query data is critical for any competitor to close the gap, and that LLMs alone cannot substitute for it.[10]

Cementing Control of the GenAI Data Layer

Google’s vast index gives it a clear and powerful advantage in handling these long-tail queries. The strategic importance of this data was further underscored in testimony from Microsoft and OpenAI, which argued that limited access to high-quality search APIs is an “innovation killer” for GenAI products.[10] OpenAI explicitly testified that access to Google’s API would materially improve its models’ reliability and user experience.[10]

Given these coming regulatory requirements, curbing unauthorized scraping activity makes perfect strategic sense. Disabling &num=100 raises the cost of bulk data extraction, preserving Google’s long-tail data advantage until formal data-sharing channels take effect. This effectively steers competitors away from unauthorized scrapers and toward regulated, auditable data sources. If these new remedies succeed, smaller General Search Engines (GSEs) and GenAI entrants could use Google’s index breadth and click signals to narrow the 80/20 gap in search coverage; if they falter, Google’s integration of AI Overviews (AIO) with Search will only reinforce its current data lead.

Implications for Founders (especially AI and data products)

- RAG/agents: Budget for higher retrieval latency and cost if you depend on live SERPs. Add caching, batch queries, and consider licensed feeds or partnerships.

- Training data: Expect shallower long-tail coverage from naive scraping, skewing toward mainstream domains unless you diversify sources. The resulting bias favors established publishers and commercial domains.

- SEO Measurement: Reset baselines from Sept 12, 2025. Compare clicks and conversions, and annotate the impressions break in all dashboards.[1]

- Go-to-market: If your product resells search-derived metrics (rank, visibility, share of voice), communicate the methodology change and re-benchmark. SISTRIX, among others, has already adjusted collection methods.[12]

- Enterprise Search: Expect differentiation to hinge on proprietary data access, domain-specific embeddings, and user-context integration. The moat lies in owning structured, permissioned corpora (emails, docs, tickets, CRM data), building deep connectors into enterprise SaaS, and maintaining compliance-grade retrieval pipelines that cannot be replicated through public web data.
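For the caching advice in the RAG/agents item above, a minimal sketch of a time-to-live cache in front of a search-results provider might look like the following. The class `TTLCache` and the stub `fake_licensed_feed` are hypothetical names used for illustration; in practice the fetch function would wrap whatever licensed feed or partner API a team actually uses.

```python
import time
from typing import Callable, Dict, List, Tuple


class TTLCache:
    """Cache results per normalized query so repeated lookups within
    `ttl_seconds` do not trigger another paid or rate-limited fetch."""

    def __init__(self, fetch: Callable[[str], List[str]], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, List[str]]] = {}
        self.misses = 0  # number of times the underlying provider was called

    def get(self, query: str) -> List[str]:
        # Normalize case and whitespace so trivially different queries share an entry.
        key = " ".join(query.lower().split())
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]
        self.misses += 1
        results = self._fetch(key)
        self._store[key] = (now, results)
        return results


def fake_licensed_feed(query: str) -> List[str]:
    """Stand-in for a real provider call (assumption: returns result URLs)."""
    return [f"https://example.com/{query.replace(' ', '-')}/{i}" for i in range(3)]


cache = TTLCache(fake_licensed_feed, ttl_seconds=3600)
cache.get("Activant Research")
cache.get("activant   research")  # same normalized key, served from cache
print(cache.misses)  # 1
```

The TTL is the main design choice here: a longer window cuts retrieval cost and latency further, at the price of staler results for fast-moving queries.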

Bottom line

With a six-year remedy period, Google has time to shape implementation, entrench its ecosystem, and attempt to adapt compliance on its own terms. Google is narrowing automated access to the discovery layer. The move reflects a deliberate pre-remedy posture designed to fortify Google’s AI ecosystem ahead of regulatory enforcement and cement its control over the foundational data layer of the GenAI stack. Without robust search APIs, competing GenAI assistants will struggle to retrieve real-time information, cementing Google’s edge in a market where grounded reasoning and real-time accuracy are defining advantages. For AI startups, the smart move is to de-risk from raw SERP scraping: shift to licensed sources, strengthen retrieval ops, and invest in first-party data. For everyone else, mark mid-Sept ’25 as the break in your time series and keep shipping.
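Marking the break in a time series can be as simple as splitting the data at a cutoff date and computing separate baselines on each side. The sketch below uses September 12, 2025 as the cutoff, following the baseline-reset date suggested earlier in the article; the daily figures and the helper names `split_at_break` and `baseline` are hypothetical.

```python
from datetime import date
from statistics import mean

# Assumption: cutoff taken from the article's suggested baseline reset date.
BREAK_DATE = date(2025, 9, 12)


def split_at_break(rows, break_date=BREAK_DATE):
    """Split daily rows into (pre-break, post-break) lists."""
    pre = [r for r in rows if r["day"] < break_date]
    post = [r for r in rows if r["day"] >= break_date]
    return pre, post


def baseline(rows, metric):
    """Average of one metric over a list of daily rows."""
    return mean(r[metric] for r in rows)


# Hypothetical daily Search Console export: impressions drop, clicks hold steady.
rows = [
    {"day": date(2025, 9, 9), "impressions": 1000, "clicks": 50},
    {"day": date(2025, 9, 10), "impressions": 980, "clicks": 52},
    {"day": date(2025, 9, 11), "impressions": 1020, "clicks": 48},
    {"day": date(2025, 9, 12), "impressions": 600, "clicks": 51},
    {"day": date(2025, 9, 13), "impressions": 590, "clicks": 49},
    {"day": date(2025, 9, 14), "impressions": 610, "clicks": 50},
]

pre, post = split_at_break(rows)
for metric in ("impressions", "clicks"):
    print(metric, "pre:", baseline(pre, metric), "post:", baseline(post, metric))
```

In this toy data the impressions baseline falls from 1000 to 600 while the clicks baseline stays at 50, which is the pattern the article describes: a reporting break rather than a traffic loss.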

Endnotes

[1] Search Engine Journal, Google Modifies Search Results Parameter, Affecting SEO Tools, 2025

[2] Search Engine Land, 77% of sites lost keyword visibility after Google removed num=100: Data, 2025

[3] Locomotive, Google Removes &num=100 Parameter: What This Means for Your Website, 2025

[4] Jonathan Clark via LinkedIn, POV: Google’s Removal of &num=100: What It Really Means and What To Do Next, 2025

[5] Search Engine Land, ChatGPT’s answers came from Google Search after all: Report, 2025

[6] Jamie Indigo via LinkedIn

[7] Office of Public Affairs U.S. Department of Justice, Department of Justice Wins Significant Remedies Against Google, 2025

[8] The Batch, Google Must Share Data With AI Rivals, 2025

[9] Office of Public Affairs U.S. Department of Justice, Department of Justice Wins Significant Remedies Against Google, 2025

[10] U.S. Department of Justice, “Plaintiffs’ Remedies Responsive Proposed Findings of Fact,” filed May 29, 2025, in United States et al. v. Google LLC (No. 1:20-cv-03010, D.D.C.).

[11] Search Engine Journal, Google Modifies Search Results Parameter, Affecting SEO Tools, 2025

[12] PPC Land, How SISTRIX adjusted after Google removed the num=100 parameter, 2025

Disclaimer: The information contained herein is provided for informational purposes only and should not be construed as investment advice. The opinions, views, forecasts, performance, estimates, etc. expressed herein are subject to change without notice. Certain statements contained herein reflect the subjective views and opinions of Activant. Past performance is not indicative of future results. No representation is made that any investment will or is likely to achieve its objectives. All investments involve risk and may result in loss. This newsletter does not constitute an offer to sell or a solicitation of an offer to buy any security. Activant does not provide tax or legal advice and you are encouraged to seek the advice of a tax or legal professional regarding your individual circumstances.

This content may not under any circumstances be relied upon when making a decision to invest in any fund or investment, including those managed by Activant. Certain information contained in here has been obtained from third-party sources, including from portfolio companies of funds managed by Activant. While taken from sources believed to be reliable, Activant has not independently verified such information and makes no representations about the current or enduring accuracy of the information or its appropriateness for a given situation.

Activant does not solicit or make its services available to the public. The content provided herein may include information regarding past and/or present portfolio companies or investments managed by Activant, its affiliates and/or personnel. References to specific companies are for illustrative purposes only and do not necessarily reflect Activant investments. It should not be assumed that investments made in the future will have similar characteristics. Please see “full list of investments” at https://activantcapital.com/companies/ for a full list of investments. Any portfolio companies discussed herein should not be assumed to have been profitable. Certain information herein constitutes “forward-looking statements.” All forward-looking statements represent only the intent and belief of Activant as of the date such statements were made. None of Activant or any of its affiliates (i) assumes any responsibility for the accuracy and completeness of any forward-looking statements or (ii) undertakes any obligation to disseminate any updates or revisions to any forward-looking statement contained herein to reflect any change in their expectation with regard thereto or any change in events, conditions or circumstances on which any such statement is based. Due to various risks and uncertainties, actual events or results may differ materially from those reflected or contemplated in such forward-looking statements.